{tocify} $title={Table of Contents}

Details about creating Linked Service and DataSet is provided below in Steps to create pipeline section

For that you provide the Server address, Database Name and the credential. And finally click on Test Connection to confirm all ok.

Search for SQL and select SQL Server, provide the Name and select the linked service, the one created for connecting to SQL

ForEach works on array, it's input. Here it is termed as Items , provide following against it

iii. Against Query - provide the query, here we want all data from the current Table

Introduction

There are many file formats supported by Azure Data factory like

- Avro format

- Binary format

- Delimited text format

- Excel format

- JSON format

- ORC format

- Parquet format

- XML format

Each file format has some pros and cons and depending upon the requirement and the feature offering from the file formats we decide to go with that particular format.

One of the most used format in data engineering is parquet file, and here we will see how to create a parquet file from the data coming from a SQL Table and multiple parquet files from SQL Tables dynamically.

What is Parquet file format

Parquet is structured, column-oriented (also called columnar storage), compressed, binary file format.

It is opensource, and offers great data compression(reducing the storage requirement) and better performance (less disk I/O as only the required column is read).

It contains metadata about the data it contains(stored at the end of the file)

Note: Binary files are a computer-readable form of storing data, it is not a text file rather it stores data in the form of bytes.

Create parquet file from SQL Table data

If you are beginner then would ask you to go through - Getting Started with Azure Data Factory

To do this we simply make use of copyData activity in a pipeline and provide the details of source (i.e. which Table to refer) and sink (i.e. ADLS Gen 2 folder location).

Details about creating Linked Service and DataSet is provided below in Steps to create pipeline section

This is great for single Table, what if there are multiple tables from which parquet file is to be created?

You can say, we can use same pipeline - by just replacing the table name, yes that will work but there will be manual intervention required.

And what if there are hundred's and thousand's of table?

How parquet files can be created dynamically using Azure data factory pipeline?

In order to create parquet files dynamically, we will take help of configuration table where we will store the required details.

This configurations can be referred at runtime by Pipeline with the help of LookUp activity, the output of this activity can be used to dynamically provide the settings value, thus no hardcoding required in the pipeline.

So same pipeline can be used for all the requirement where parquet file is to be created, just an entry in the configuration table is required.

Let's see below, how to do it...

Steps in creating pipeline - Create parquet file from SQL Table data dynamically

Source and Destination connection - Linked Service

To create a connection, Click on Manage tab from the left pane.

On the Linked services page, select +New to create a new linked service. Provide name and select the integration runtime

Here the source is SQL database tables, so create a Connection string to this particular database.

Note : Alternatively and recommended way is to make use of Key Vault

Now, create another linked service for the destination here i.e., for Azure data lake storage

Using this linked service, ADF will connect to these services at runtime. Next is to tell ADF, what form of data to expect.

Define the structure of the data - Datasets

Two datasets is to be created one for defining structure of data coming from SQL table(input) and another for the parquet file which will be creating (output)

Select Author tab from the left pane --> select the + (plus) button and then select Dataset.

Search for SQL and select SQL Server, provide the Name and select the linked service, the one created for connecting to SQL

Add a parameter called TableName and in connection tab provide following against Table - @dataset().TableName

We will make use of parameter, this will help us in achieving the dynamic selection of Table.

Now search for storage and select ADLS gen2, Parquet as format, give the Name and select the linked service

container

folder

filepath

this will help us in achieving the dynamic creation of parquet file.

In connection tab add following against File Path

@dataset().container/@dataset().folder/@dataset().filepath

Configuration Table

Using this table we will have some basic config information like the file path of parquet file, the table name, flag to decide whether it is to be processed or not etc. (more columns can be added as per the need).

This table will be referred at runtime and based on results from it, further processing will be done.

Designing the Pipeline

The purpose of pipeline is to get data from SQL Table and create a parquet file on ADLS

i. Lookup Activity

The fist step where we get the details of which all tables to get the data from and create a parquet file out of it.

- Place a lookup activity , provide a name in General tab.

- Under Settings tab - select the dataset as DS_SQLServer , provide the table name as config (the one created in above step), select user entry as Query and provide below against the Query

select * from config where TobeProcessed = 1

Here basically we are fetching details of only those objects which we are interested(the ones having TobeProcessed flag set to true)

So based on number of objects returned, we need to perform those number(for each) of copy activity, so in next step add ForEach

ii. For each activity

@activity('ConfigLookup').output.value

Basically we are giving it the output of ConfigLookup activity, so it will iterate through it . And in each iteration we need to perform the act of creating parquet file and for that we add CopyData activity in ForEach.

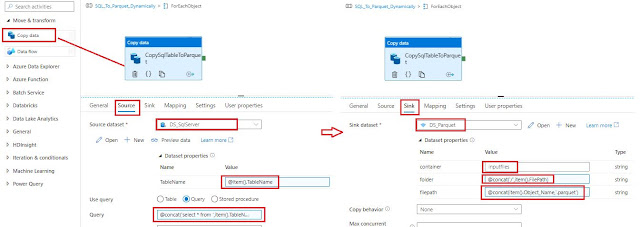

iii. CopyData Activity

- Select Copy data activity , give a meaningful name.

- On Source tab

i. Select dataset as DS_SQLServer, as soon as you do it - you are asked to provide value against parameter TableName, provide below

@item().TableName

ii. Select Query against Use Query

iii. Against Query - provide the query, here we want all data from the current Table

@concat('select * from ',item().TableName)

Question might come in your mind, where did item came into picture?

Here item is current object/record of the ForEach items array.

In previous step, we had assigned output of lookup activity to ForEach's Items thus if lookup activity returned 3 records then Foreach items will have 3 records and it is referred as item in it.

Thus you provide the value which is in the current iteration of ForEach loop which ultimately is coming from config table

- On Sink tab

That's it, pipeline is ready to use.

Conclusion

We got a brief about a parquet file and how it can be created using Azure data factory pipeline . And in a scenario where there is need to create multiple parquet files, same pipeline can be leveraged with the help of configuration table .

Thus the pipeline remains untouched and whatever addition or subtraction is to be done, is done in configuration table.

It is a design pattern which is very commonly used to make the pipeline more dynamic and to avoid hard coding and reducing tight coupling.

If you have some better idea or any suggestion/question, do post in comment !!

Do share if you find this helpful .......

Knowledge Sharing is Caring !!!!!!

How would you go about this when the column names contain characters parquet doesn't support?

ReplyDelete