Application Gateway Error

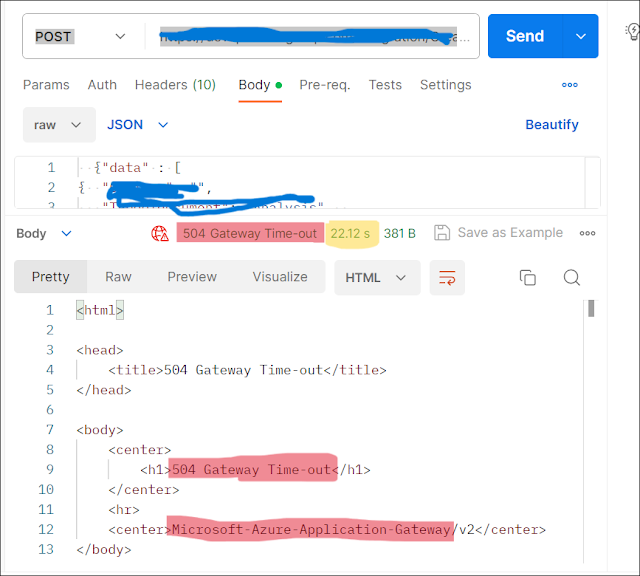

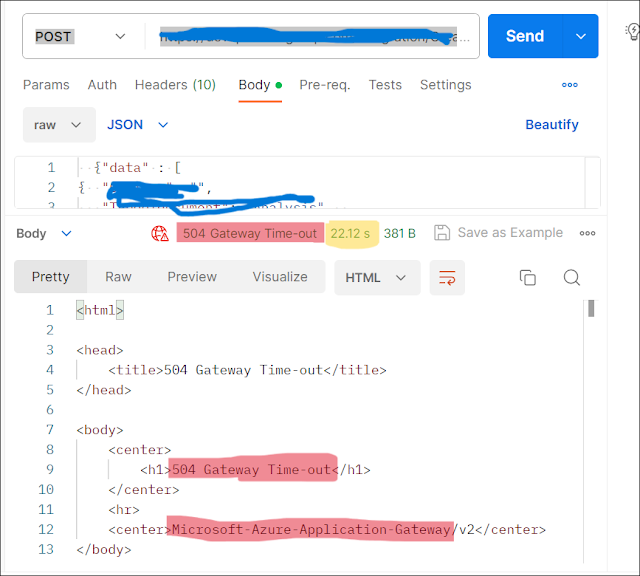

504 Gateway Timeout from Azure Application Gateway while testing Logic App

Issue: While testing an Interface, started get…

Issue: While testing an Interface, started getting following error intermittently an…

Issue: There was a need to connect to SharePoint in a Logic App Standard workflow, b…

Introduction: The feature to directly import from “Create from Azure Resource(Logic …

Issue: While trying to import a Logic App Standard workflow in APIM, got below error …

Introduction: Azure Table storage is a service that stores non-relational structured…
